Split Testing To Gain Critical Insights: How to Approach Your Next Test

When attempting to figure out customer intent, it can feel like throwing a lot of marketing at the wall to see what sticks. But studying what sticks might be more important than many realize. According to McKinsey, companies that make intensive use of customer analytics are 23 times more likely to outperform competitors in acquiring new customers.

So, how do you arrive at analytics powerful enough to act on? One of the most rigorous ways to generate new data is split testing.

A split test brings the scientific method you learned about in high school to marketing: start with a hypothesis, test it, and weigh the results.

In this article, we will study the art (and science) of split testing and discover the best ways to rigorously test which of your marketing campaigns have the greatest impact on your customers.

Split testing: Definitions and techniques

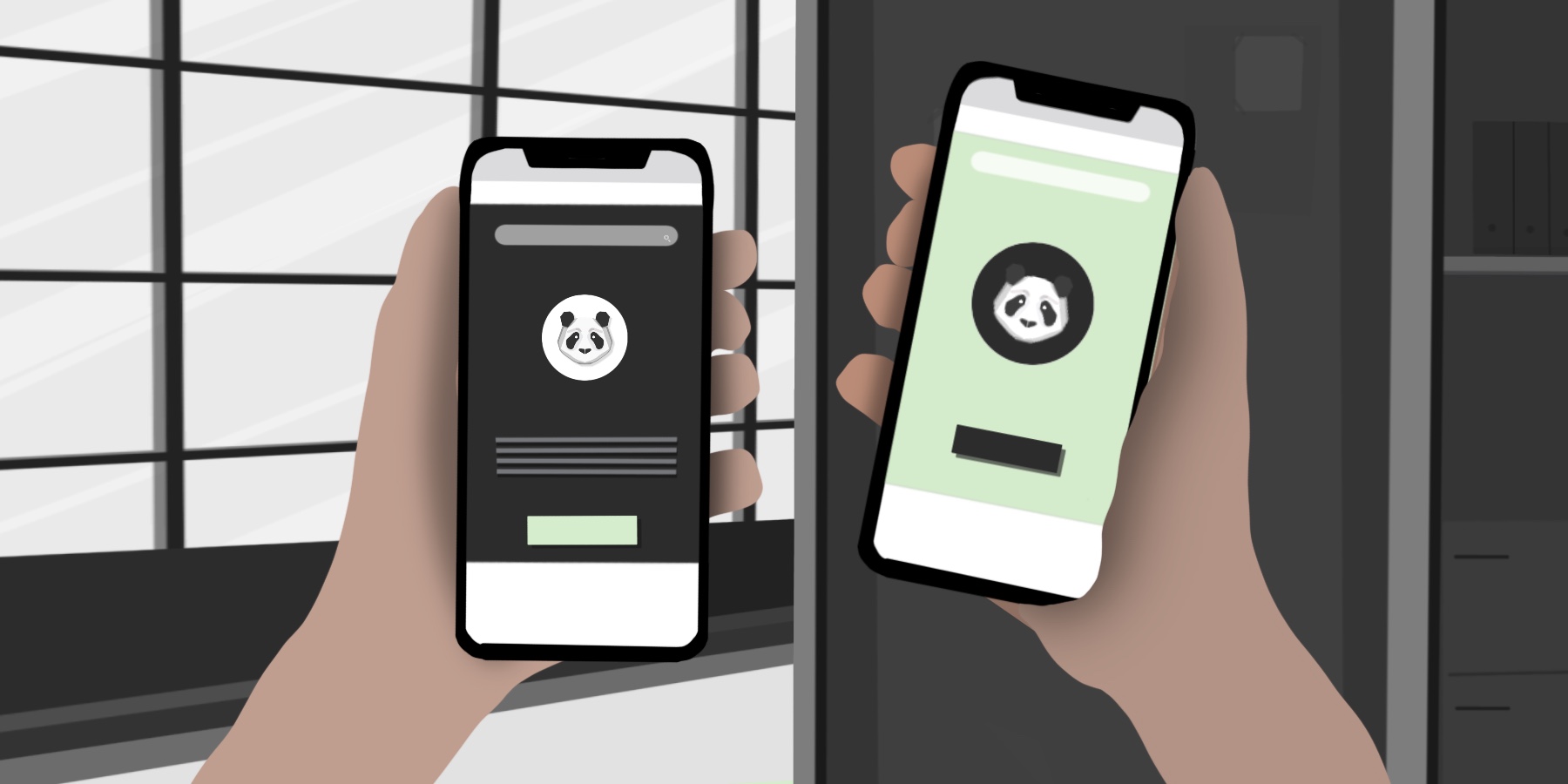

Let’s start with a definition: split testing runs two marketing approaches simultaneously against each other to see which one works best. In a split test, the two contenders (for example, two versions of a landing page for the same project) might look significantly different. It’s one of the key ways to generate customer insights so your team can make data-driven decisions.
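Testing platforms normally decide who sees which version for you. To make “simultaneous” concrete, here is a minimal Python sketch of one common approach, deterministic hash-based bucketing, assuming each visitor has a stable ID such as a first-party cookie value. The function name `assign_variant` and the experiment name are hypothetical, not any particular tool’s API. Hashing keeps each returning visitor in the same version while splitting traffic roughly 50/50.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to one version of an experiment.

    Hashing the experiment name together with the visitor ID means the same
    visitor always lands in the same version, while separate experiments
    split traffic independently of each other.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)  # use the first 32 bits of the hash
    return variants[bucket]


# Simulate 10,000 hypothetical visitors hitting a homepage split test
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "homepage-redesign")] += 1
print(counts)  # each version should receive close to half the visitors
```

Because both versions run during the same period, seasonality and campaign timing affect them equally, which is what makes the comparison fair.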

Many marketing teams use the terms split testing and A/B testing interchangeably. But there’s an important distinction here: split testing is just one of the approaches marketers can take when experimenting and testing.

The right testing method depends on the variables you need to test, as well as the time and resources you have at your disposal.

In other words, a split test might compare two entirely different web pages. Other forms of A/B testing might compare two otherwise identical web pages with one variable (such as a headline) isolated for testing. Multivariate testing, another common form of testing, tests variations of several elements at once, systematically and with statistical analysis.

Let’s dive deeper into the definitions of these three common types of testing to understand when you’d use each one:

- Split testing. As defined above, a split test pits two substantially different versions against each other, such as two versions of a social media creative for the same project. It doesn’t require as much time (or patience) as A/B testing, but it’s a riskier move, because you won’t know specifically what’s driving conversions up or down.

- A/B testing. Imagine you’re painting a landscape. If split testing is the big, heaping dollop of blue paint that fills in the sky, A/B testing is concerned with the details that bring your painting to life. Because A/B testing measures one change at a time (like an email subject line), it requires significantly more time than testing several changes, or an entirely different experience, at once. The benefit is that you know exactly what impacts your metrics.

- Multivariate testing. Think of multivariate testing as an extension of A/B testing in which you test two or more elements at a time. Every combination of element versions becomes its own variation, so the number of variations multiplies with each element you add, and testing them all takes time. Multivariate testing also requires a significant amount of traffic, which is why it isn’t always chosen over the other methods.
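To see how quickly combinations pile up, here is a small Python sketch using hypothetical page elements and a hypothetical traffic figure. `itertools.product` enumerates every combination, and the same traffic then has to be divided across all of them.

```python
from itertools import product

# Hypothetical page elements and the versions being tested for each
elements = {
    "headline": ["Save time", "Save money", "Work smarter"],
    "hero_image": ["team photo", "product screenshot"],
    "cta_text": ["Start free trial", "Book a demo"],
}

# Every combination of one version per element becomes its own page variation
combinations = list(product(*elements.values()))
print(len(combinations))  # 12 (3 x 2 x 2)

# Hypothetical traffic available during the test window
visitors = 24_000
per_variation = visitors // len(combinations)
print(per_variation)  # 2000 visitors per variation
print(visitors // 2)  # 12000 visitors per version in a two-version split test
```

Adding a fourth element with two versions would double the count to 24 and halve the traffic each variation receives.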

If you have the resources and the traffic to handle testing multiple options at once, a multivariate test is a handy way to cut to the chase. If you’re optimizing a smaller offering, you might want to start with A/B tests.

A split test, on the other hand, is what you’d run to see which of two homepage styles performs better, before you nail down the specific messaging around your product or service.

Top Tip: To find out more about running A/B tests, read our article on optimizing customer experiences 🐼

The benefits of split testing

Split testing helps you understand what your customers are thinking, which every marketer knows is critical to success.

There are plenty of ways to achieve this, of course. For example, you could:

- Run surveys

- Hold focus groups

- Poll your followers on social media

- Invite customers to email their feedback

- Check your SEO analytics for top visitor keywords

The brilliance of the split test, however, is that it requires none of the above. It relies on observing what customers do rather than what they say.

Focus groups can only take you so far before the data becomes unreliable and non-predictive. Built-in biases and imperfect memory lead people to report experiences that differ from reality, usually without realizing they’re doing it.

Audiences might “say” they have certain preferences, but what decisions do they really make when they’re sitting at their computer and considering a purchase?

That’s the question split testing answers, by measuring actual behavior rather than reported behavior.

Before we dive into how to do it, let’s get more specific about these digital marketing benefits. Here’s what split testing can do:

- Identify customer pain points. Customers might not recognize their own pain points until they experience them, which is why split tests come in handy. For example, maybe customers say they love your prices, but when you split test your checkout page or sign-up form, you find that a high delivery charge cancels out this effect. Now you know that the delivery price can be just as big a factor as the product’s price itself.

- Increase conversions from existing traffic. It’s not always easy to generate more website traffic, but you can optimize for the traffic you already have. Split tests help you choose more impactful marketing messaging to make the most of the visitors you’re already attracting.

- Gain customer insights. Let’s take the “delivery price” example again. What if you applied this insight beyond one product page? Baking the delivery price into your product pricing and offering “free delivery” could then improve every offer on your site. Insights like this let you dial in the big changes first and then optimize individual page elements to fine-tune your conversion rates.

- Inform your branding. The chief advantage of the split test? It tells you what resonates with your customers. What specific brand message seems to click with people? What does the “winning” asset in your split test tell you about why customers want to buy from you? Split testing helps you identify these insights so you can reuse them in later advertisements and brand messaging assets.

Top Tip: Learn how to turn customer insights into actionable decisions in our article on customer intelligence tips 🐼

How to run an effective split test

In the early stages, running split tests might feel like trying a little bit of everything (video marketing campaigns or ebooks, a simplified website UX, new branding, etc.). Even early on, though, you can structure your test to maximize the quality of the data you collect.

What are your website visitors trying to tell you? Here’s how you find out.

Figure out what you’re testing first

Your first step is diagnosis. Now’s the time to consider a basic question: what do you want to test?

To begin, look at your existing data whenever possible: surveys, heat maps, session replays, website analytics. Chances are, you’re already sitting on a mountain of data. Use these assets to your advantage and find where customers are already pointing you. This will help you make educated guesses about your marketing assets.

Next, define the asset you want to test. Are you testing:

- A new landing page?

- Different designs on your web page?

- A new lead magnet, such as a free ebook?

- A new website?

- Introductory emails to your newsletter?

- Your checkout page? Your checkout messaging?

It may sound like a lot at first, but think of the testing process as a funnel: you start with the big stuff and work your way down. Split tests help you test the broad, big-picture messaging at the top. Later, you can use focused A/B tests to nail down the details.

Decide on your split testing tools

If you’re feeling stuck, there’s good news: you’re not reinventing the wheel. People have run split tests before, which means there are plenty of online platforms you can turn to. Let’s review some of the tools you can employ once you’ve decided on the asset you’re going to test:

- AB Tasty. As you can tell from the name, AB Tasty is built for testing: it lets you test just about anything in your digital presence, from how your site appears on mobile devices to which of your customers see which version of the test. If that sounds like too much, you can lean on their pre-designed templates to kickstart the split testing process.

- Optimizely. Not sure which hero image to use on your website? Which call to action to publish? Optimizely helps you test it all, providing an entire marketing platform to run both split tests and A/B tests.

- NPS. Survey tools like Qualtrics help you measure your Net Promoter Score (NPS) at the split testing stage. NPS is calculated from answers to a question like: how likely are you to recommend this product to a friend or colleague? If you’re running large split tests, you can use NPS as a guide to which experience leads customers to the most long-term product loyalty.

- Heat maps. If you receive enough traffic, heat maps can help you see how visitors interact differently with each version in a split test. You can also use what these heat maps reveal to identify the A/B variables you want to test next, including CTAs and headlines.
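For reference, NPS is conventionally computed on a 0 to 10 scale as the percentage of promoters (9 or 10) minus the percentage of detractors (0 through 6). Here is a minimal Python sketch, assuming you have collected ratings separately from visitors who saw each version; the function name and the survey data are hypothetical.

```python
def net_promoter_score(ratings):
    """NPS on a -100..100 scale from 0-10 'how likely are you to recommend' ratings.

    Promoters answer 9 or 10, detractors answer 0 through 6; passives (7-8)
    count toward the total but not toward either group.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey responses from visitors who saw each version
version_a = [10, 9, 8, 7, 6, 10, 9, 3, 8, 9]
version_b = [7, 8, 6, 5, 9, 7, 8, 4, 10, 6]
print(net_promoter_score(version_a))  # 30.0
print(net_promoter_score(version_b))  # -20.0
```

Ten responses per version is far too few to act on; this only shows the arithmetic.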

Chances are, one or more of these tools will help you diagnose the specific problems you’re having. You can integrate these tools into a new split test and then sit back and wait for the results. But don’t start that test until you’ve learned some best practices first.

Best practices for split testing

Remember: you’re essentially running a science experiment here. Any good science experiment requires controls and parameters to ensure the quality of your results. Here’s what you’ll have to keep in mind before the split test:

- Sample size, minimum detectable effect, and anticipated run time. Calculate these three values before starting your split test. The minimum detectable effect is the smallest improvement you want the test to be able to detect: the smaller it is, the larger the sample you’ll need. Together with your current conversion rate, it determines how large a sample you need, and your traffic then determines how long the test must run before you can treat the results as definitive. Many testing tools will handle the sample size for you, but only if you let the test run without interrupting it.

- Start big, then narrow down. If you’re just starting out, now is not the time to test your call-to-action text. Headlines matter too, but those refinements belong in the A/B tests and multivariate tests that come later. Your first mission should be to identify the asset or style that resonates with your customers.

- Watch out for contamination due to testing procedures. For example, if the “control” page is being tested against a variation, it’s not a fair test to just implement a redirect for 50% of the traffic to the new variation. This redirect can cause issues like page flicker, longer page load times, etc., that have nothing to do with the optimization efforts being tested. Instead, set your control up as a redirect so that both the control and the variation are redirects, or create the variation so that it doesn’t require a redirect (e.g., through server-side changes).

- Wait for your data. If data-driven decisions are your goal, don’t stop a split test early just because you like the results so far. This is why you should determine the test’s schedule before you launch. You need a scientific approach here: set your biases aside and let the test run its course.
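To put the first best practice into numbers, here is a rough Python sketch of the standard normal-approximation sample size formula for comparing two conversion rates, plus a simple run time estimate. It assumes a two-sided test at a 5% significance level with 80% power, an absolute minimum detectable effect, and traffic split evenly between two versions; the function names and inputs are hypothetical. Your testing tool’s calculator may use a different method and give somewhat different figures.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, mde, alpha=0.05, power=0.80):
    """Visitors needed in EACH version to detect an absolute lift of `mde`.

    Standard normal-approximation formula for a two-sided test comparing
    two conversion rates.
    """
    p1, p2 = baseline, baseline + mde
    if not (0 < p1 < 1 and 0 < p2 < 1 and mde > 0):
        raise ValueError("rates must be between 0 and 1 and mde must be positive")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / mde ** 2)


def run_time_days(n_per_variant, daily_visitors, variants=2):
    """Days needed if all daily traffic is split evenly across the versions."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 5% baseline conversion rate, and the smallest lift
# worth detecting is one percentage point (5% -> 6%)
n = sample_size_per_variant(baseline=0.05, mde=0.01)
days = run_time_days(n, daily_visitors=1_000)
print(n, days)  # roughly 8,150 visitors per version, about 17 days
```

Halving the minimum detectable effect roughly quadruples the required sample, which is why small tweaks on low-traffic pages can take a very long time to test.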

At this point, you probably have a handle on the tools you want to use, the questions you want to answer, and what a split test can tell you. There’s just one remaining variable to remove from the equation: you.

Split testing takes time to build up a sample large enough to give you statistically significant data. That requires some patience. Set up your test, step away, and avoid basing any decisions on the data until the test is finished.
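Why does stopping early matter? One way to see it is an “A/A” simulation, in which both versions have the same true conversion rate, so any “winner” is a false positive. The Python sketch below, using hypothetical traffic numbers, compares a tester who checks a pooled two-proportion z-test every day and stops at the first significant result with one who checks only once, at the planned end. Exact percentages depend on the random seed and inputs, but the daily peeker declares false winners noticeably more often.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a, conv_b, n):
    """Two-sided pooled z-test p-value for two groups of equal size n."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 1.0
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_peeking(runs=500, days=10, visitors_per_day=200, rate=0.10,
                     alpha=0.05, seed=7):
    """A/A simulation: both versions share the SAME true conversion rate,
    so every 'significant' result is a false positive."""
    rng = random.Random(seed)
    peeking_false_wins = 0
    fixed_false_wins = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        significant_on_some_day = False
        for _ in range(days):
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_day))
            n += visitors_per_day
            p = p_value(conv_a, conv_b, n)
            if p < alpha:
                significant_on_some_day = True  # a daily peeker would stop here
        peeking_false_wins += significant_on_some_day
        fixed_false_wins += p < alpha  # only the final, pre-planned check
    return peeking_false_wins / runs, fixed_false_wins / runs


peeking_rate, fixed_rate = simulate_peeking()
print(f"false winners when peeking daily: {peeking_rate:.1%}")
print(f"false winners when waiting:       {fixed_rate:.1%}")
```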

What if a split test doesn’t work?

Let’s say you’ve run the test after completing all the steps above. You’ve been careful about selecting the assets to test and about the structure of your test. You’ve let the test collect a large sample.

Then, when it comes time to review the results, customers seem split: both versions of your asset convert at roughly the same rate.

Was it all a waste of time? Not necessarily. Consider the example of Steven Macdonald, who once ran an A/B test and saw no effect on his conversion rates.

As he learned, a test like this still provides data: it tells you that the messaging you just tested doesn’t have a major impact on customers.
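To check whether a difference like this is real or just noise, many tools run something like a two-proportion z-test. Here is a minimal Python sketch with hypothetical conversion counts; it assumes visitors convert independently and that samples are large enough for the normal approximation. A p-value above your chosen threshold (commonly 0.05) means the test found no statistically significant difference, not that the versions are proven identical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (absolute difference in conversion rate, two-sided p-value)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, p_value


# Hypothetical results: 8,200 visitors per version, 410 vs. 428 conversions
diff, p = two_proportion_z_test(conv_a=410, n_a=8_200, conv_b=428, n_b=8_200)
print(f"difference: {diff:.2%}, p-value: {p:.2f}")
if p >= 0.05:
    print("No statistically significant difference at the 5% level")
```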

If your tests don’t show you any statistically significant differences, you might conclude one of two things:

- You need to “zoom out” and rethink your hypothesis. Your hypothesis might have been, for example, that a website focusing on one of your products would make more sales than one that focused on another product. Did it? If not, don’t be disappointed. Even disproving a hypothesis represents a key insight you can sometimes use to discover what your customers prefer.

- You need to “zoom in” and test new hypotheses. Maybe the lack of a significant result is a sign that it’s not your overall asset that needs testing. Maybe you’re ready to drill down to A/B tests and optimize individual components.

If it’s the latter, prepare to go granular. You’ve established the baseline, and now it’s time to dive into the details. That’s what Macdonald did, adhering to the principle of “constant improvement.” Macdonald says:

“You cannot expect to replicate these results, but what you can replicate is the continuous optimization of testing winning variations against newer variations based on the data you collect. Just because you run one A/B test, doesn’t mean it’s over.”

In other words, take on a “continuous optimization” mindset. Just because you ran one test and found no statistically significant difference doesn’t mean you’re done testing. Conversion rate optimization (CRO) is rarely finished after a single test. Now it’s time to zoom in and run a new one.

What if you need to start A/B testing?

To run an effective A/B test, start with a similar question: what are you testing? What makes something “testable” in the first place? For an A/B test, you’ll need to examine elements that are:

- Repeatable. For example, you can A/B test different call-to-action buttons because you know customers will keep viewing and clicking those buttons over time. You can also repeat tests for specific variables, such as trying new button colors in Version B, Version C, and so on.

- Significant. Before you test how good the borders on your checkout page look, consider the variables that matter most: pricing, CTA elements, testimonials, and headlines. Testing works, but only if you test the elements that meaningfully affect customer behavior and your user experience.

- Measurable. What are the metrics you want to aim for? For example, does a larger CTA increase traffic to your purchase page via a higher click-through rate? Great. But what if a smaller share of those new clicks converts into buyers? Decide on the result you want from your A/B test before you sit down to optimize.
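To illustrate how the choice of metric changes the outcome, here is a Python sketch using hypothetical numbers for a CTA size test: the larger CTA wins on click-through rate but loses on purchases per visitor. The data and function names are illustrative only.

```python
# Hypothetical results from a test of CTA size; all numbers are illustrative
results = {
    "small_cta": {"visitors": 10_000, "clicks": 800, "purchases": 160},
    "large_cta": {"visitors": 10_000, "clicks": 1_100, "purchases": 140},
}


def funnel_metrics(r):
    """Click-through rate, click-to-purchase rate, and purchases per visitor."""
    return {
        "click_through": r["clicks"] / r["visitors"],
        "click_to_purchase": r["purchases"] / r["clicks"],
        "purchase_rate": r["purchases"] / r["visitors"],
    }


def winner(results, metric):
    """Name of the version with the highest value for the chosen metric."""
    return max(results, key=lambda name: funnel_metrics(results[name])[metric])


for name, r in results.items():
    m = funnel_metrics(r)
    print(f"{name}: CTR {m['click_through']:.1%}, "
          f"click-to-purchase {m['click_to_purchase']:.1%}, "
          f"purchases per visitor {m['purchase_rate']:.2%}")

print(winner(results, "click_through"))  # large_cta
print(winner(results, "purchase_rate"))  # small_cta
```

Whichever metric you declare as the goal decides the “winner,” so pick it before the test starts, and still check that the difference is statistically significant.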

Some of the most common elements to test include headlines, headline positioning, colors, page copy length, sign-up workflows, checkout processes, pricing, and email subject lines. You aren’t limited to these elements, but that should give you an idea of what kind of variables meet the criteria above.

Finally, employ the same approach as you did for split testing. By now, you should have some familiarity with the available tools, such as Optimizely, many of which include A/B testing features. Get familiar with these platforms and consider testing new colors, new copy, new pricing, and more.

Continue to follow the same best practices: use large sample sizes and set a schedule in advance, so you stick with the test and give each variation a chance to prove itself.

Top Tip: Everything’s easier with a proven platform to help you along. Learn more about how to turn customer data into great marketing decisions 🐼

Key takeaways

Marketing decisions should be data-driven decisions. Marketers need to understand the buttons or features that keep conversion rates down or drive them up. With split testing, you’ll abandon the “shot-in-the-dark” mentality and take a scientific approach to figuring out what your customers want.

You might not be wearing a lab coat when you run a split test, but your findings will still be impactful. Remember to stick to your plan, gather a large sample size, and let the data show you where your marketing needs to go.
