19

optimization campaigns

71%

of tests outperformed baselines

~$404,000

revenue protected (one test)

INTRODUCTION

Jessica Magyar’s ecommerce team at Holiday Inn Club Vacations was under pressure to grow online sales.

Everyone had ideas about what needed to change on the site and in email flows, including updating CTAs, tweaking copy, and adding new content.

But with no testing framework, Jessica risked spending resources, and her reputation, on costly distractions that might even hurt performance.

So, she brought in Smart Panda Labs to build a post-click experience (PCX) experimentation program that would let her team confidently prioritize digital changes and drive purchases.

ABOUT THE CLIENT

Holiday Inn Club Vacations (HICV) is a resort, real estate, and travel company offering vacation experiences across the US.

Known for its family-friendly destinations and timeshare offerings, the company operates a large digital commerce presence to serve both prospective buyers and existing members.

THE CHALLENGE

Jessica needed to turn guesswork into tangible improvements

Jessica’s team couldn’t afford to keep making gut-based decisions or guessing what would improve the customer experience (CX) for such high-consideration purchases.

With dozens of competing opinions from across the business—each requiring design, copy, and development time—she needed a way to separate impactful opportunities from damaging changes.

Every decision would be visible to leadership, and the wrong call could cost revenue and her chances of future promotion.

Jessica needed data, clarity, and a partner who understood how to navigate these big-ticket purchase journeys. She needed Smart Panda Labs.

“The stakes were high. This was an idea I was bringing to the company, so I wanted to make sure I put my best foot forward and we hit it out of the park. I don’t want to suggest a program that won’t be successful. That wouldn’t be great for the reputation I was building.”

THE SOLUTION

Launching a people-driven experimentation program

Jessica envisioned a testing program that would become a repeatable growth engine—one that improved conversions, reduced risk, and reshaped organizational decision-making.

Having worked with Smart Panda Labs before, she trusted us to move fast, ask the right questions, and help her make a strong case internally.

The process involved:

- Choosing the right experimentation tools

- Prioritizing tests that would make the biggest impact

- Using the insights to guide strategy and protect revenue

Here’s how we turned digital guesswork into a scalable, people-driven decision engine:

1. Choosing the right technology for fast, accurate results

Before any testing, Jessica needed a platform to integrate with HICV’s existing martech stack and support sophisticated, scalable testing.

Together, we quickly evaluated the top experimentation tools and settled on the flexible, easy-to-use Optimizely. Then, we set up the technical infrastructure and collaborated to develop a testing roadmap aligned with business priorities (namely, increasing revenue).

Jessica wanted a repeatable process that her team could use to validate ideas and drive incremental revenue.

By taking the time to set things up properly, she could measure results quickly and accurately and secure early buy-in.

2. Running tests to improve the entire PCX

Jessica then tasked us with designing and executing experiments that would meaningfully improve HICV’s post-click experience—from the moment users landed on the site to final checkout.

Together, we mapped out 19 testing campaigns during our initial engagement and launched 14. By prioritizing each based on potential impact and effort, Jessica’s team could focus limited resources where they’d count most.

Early tests explored two main avenues to encourage more high-consideration purchases:

- Personalization opportunities like geo-targeted homepage content to help more visitors take the first step toward booking

- UX improvements (e.g., redesigning key widgets and streamlining processes to remove friction) so users could easily navigate the site and commit to buying

Some tests confirmed the HICV team’s bold new ideas. Others, like changing links from text to buttons (shown in the screenshot above), proved that popular internal suggestions would have made things worse. Catching those early saved time, money, and dev hours.

3. Building a culture of trust and data-driven decisions

As meaningful insights rolled in, the impact reached far beyond conversion rates. Jessica used early wins (and a few surprising losses) to shift internal conversations from opinions and assumptions to data and outcomes.

Once-skeptical executives became supporters, and colleagues pushing for untested changes began to see the value in pausing to validate first.

The program improved performance and created alignment simultaneously. And by helping the team efficiently find effective solutions, Jessica solidified her reputation as a capable and reliable leader.

With our ongoing reporting and guidance, Jessica built trust across departments and used every experiment as a case study for more thoughtful decision-making.

“There was one button or verbiage change that everyone wanted to make. But we tested it first and realized the results would have been bad. So we could say, ‘This thing that everyone thinks we need to do is actually going to hurt our program.’ So we’ve got something wrong. That was one instance that really got executive approval.”

THE RESULTS

Out of 14 experiments, 85% delivered actionable results

70%

top-of-funnel conversion boost

21%

mid-funnel conversion increase

62%

conversion drop prevented

Jessica increased conversions at critical points in the buying journey while earning executive trust and laying the groundwork for long-term growth.

From personalization to UX optimization, each A/B test either improved performance or validated whether a proposed update was worth the investment.

And Holiday Inn Club Vacations’ results spoke for themselves:

- Around 85% of campaigns produced statistically significant results, giving the team more confidence when making decisions

- Some 71% of test variations outperformed baselines, confirming that most prioritized ideas had a measurable impact

- The baseline won in 14% of cases, helping the team avoid costly mistakes by catching underperforming changes early

The most impactful experiments focused on experience optimization:

- A homepage personalization test increased top-of-funnel conversions by 70%, helping more users enter the booking experience

- A redesigned package selection widget improved mid-funnel conversions by 21%

- A CTA update the team was highly confident in would have dropped conversions by 62%; testing it first avoided a rollout that could have cost HICV hundreds of thousands of dollars in lost bookings

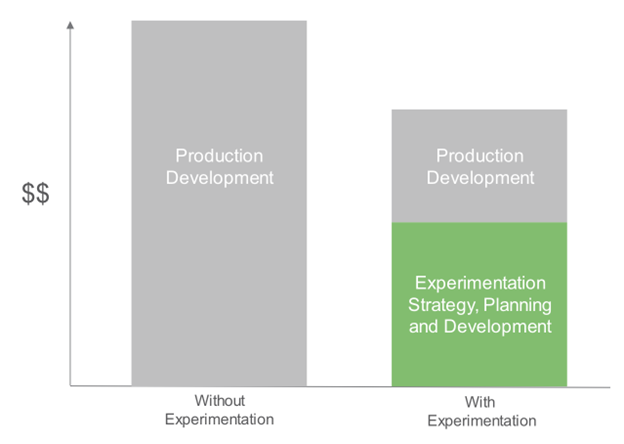

By testing ideas before committing to full development, Jessica also reduced unnecessary production costs and freed up resources.

Beyond the numbers, the program delivered lasting organizational impact.

Executives gained confidence in data, and what began as a pilot evolved into a permanent capability—now wholly owned and operated by HICV’s internal team.

Jessica’s success leading the program elevated her profile internally, and she was quickly promoted to Senior Manager of Performance Marketing and Innovation.

Her long-lasting relationship with Smart Panda Labs continues to influence how she collaborates with digital teams, even today in her partnerships role at Hilton Grand Vacations.

“Smart Panda Labs are dedicated, loyal, and trustworthy. If they say they’re going to deliver, they’ll deliver. They’re not all talk. Sometimes with vendors, you bring them on and it all sounds great in the pitch and presentations, but then you get a different or more junior team.

Because SPL are this tight-knit group of professionals, you have that confidence and trust they’ll do their best for you.”